Boosting & Bagging

In this post we discuss bootstrap sampling and the bagging framework, along with many of its applications.

Bootstrap Sampling & Bagging

- bootstrap sampling {sampling with replacement, so the same sample may be drawn more than once}

- bootstrap sampling under the bagging framework {draw multiple bootstrap samples and train an individual classifier on each}
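
The two bullets above can be sketched in a few lines of plain Python. This is only an illustration under stated assumptions: the synthetic 1-D data, the toy learner `train_threshold`, and the helper names are all hypothetical and not taken from any library.

```python
import random

def bootstrap_sample(data, rng):
    """Draw len(data) items uniformly at random WITH replacement."""
    n = len(data)
    return [data[rng.randrange(n)] for _ in range(n)]

def train_threshold(sample):
    """Hypothetical toy learner: threshold halfway between the two class means."""
    pos = [x for x, y in sample if y == 1]
    neg = [x for x, y in sample if y == 0]
    threshold = (sum(pos) / len(pos) + sum(neg) / len(neg)) / 2
    return lambda x: 1 if x > threshold else 0

def bagging_predict(models, x):
    """Simple majority vote over the individual classifiers."""
    votes = sum(m(x) for m in models)
    return 1 if 2 * votes > len(models) else 0

rng = random.Random(0)  # arbitrary fixed seed for reproducibility

# Part 1: a bootstrap sample repeats some items and misses others.
items = list(range(1000))
sample = bootstrap_sample(items, rng)
distinct_fraction = len(set(sample)) / len(items)  # close to 1 - 1/e on average

# Part 2: bagging = one classifier per bootstrap sample, then a vote.
xs = [rng.random() for _ in range(200)]
train = [(x, 1 if x > 0.5 else 0) for x in xs]  # synthetic 1-D toy data
models = [train_threshold(bootstrap_sample(train, rng)) for _ in range(25)]
print(distinct_fraction, bagging_predict(models, 0.9), bagging_predict(models, 0.1))
```

Because each draw is made with replacement, a bootstrap sample of size $n$ contains on average about $1 - 1/e \approx 63\%$ of the distinct original items; the rest are duplicates.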

Boosting

There are multiple boosting techniques out there: AdaBoost, GBDT (MART), XGBoost, LightGBM. This note covers AdaBoost for the time being.

AdaBoost

- weak classifiers put under the bagging framework

- everything combined -> ensemble learning

- weighted weak classifiers plus reweighted training samples -> AdaBoost

- AdaBoost derivation

- general weighted function for prediction

- uses the exponential loss $e^{-Y\cdot f(X)}$ to measure how well the prediction $f(X)$ agrees with the label $Y$ {is the reason for using it that it performs better than L2 loss in classification?}

- link for the derivation

- core idea: two phases

- math:

- phase 1:

- keep the current sample weights, train the current weak classifier, compute its weighted error, and use that error to compute the weight of the current weak classifier.

- phase 2:

- use the weight of the current weak classifier to update the sample weights.

- math:

- application: face detection using Haar features, before neural networks became practical
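
The two phases described above can be sketched as follows. This assumes the standard discrete AdaBoost formulas with labels in $\{-1, +1\}$, which may differ in notation from the derivation the notes refer to: weighted error $\epsilon_t = \sum_i w_i \, [h_t(x_i) \neq y_i]$, classifier weight $\alpha_t = \frac{1}{2}\ln\frac{1-\epsilon_t}{\epsilon_t}$, sample update $w_i \leftarrow w_i \, e^{-\alpha_t y_i h_t(x_i)}$ followed by normalization, and final prediction $\mathrm{sign}(\sum_t \alpha_t h_t(x))$. The weak classifier here is a 1-D threshold stump, and the toy data and function names are illustrative assumptions.

```python
import math

def stump_predict(x, thr, pol):
    """1-D decision stump: predicts pol when x >= thr, otherwise -pol."""
    return pol if x >= thr else -pol

def train_stump(xs, ys, w):
    """Return (weighted_error, threshold, polarity) of the best stump under weights w."""
    best = None
    for thr in sorted(set(xs)):
        for pol in (1, -1):
            err = sum(wi for wi, x, y in zip(w, xs, ys)
                      if stump_predict(x, thr, pol) != y)
            if best is None or err < best[0]:
                best = (err, thr, pol)
    return best

def adaboost(xs, ys, rounds):
    n = len(xs)
    w = [1.0 / n] * n            # start from uniform sample weights
    ensemble = []                # list of (alpha, threshold, polarity)
    for _ in range(rounds):
        # Phase 1: fit the weak classifier on the weighted samples,
        # then turn its weighted error into the classifier weight alpha.
        err, thr, pol = train_stump(xs, ys, w)
        err = min(max(err, 1e-10), 1 - 1e-10)   # guard against log(0)
        alpha = 0.5 * math.log((1 - err) / err)
        ensemble.append((alpha, thr, pol))
        # Phase 2: use alpha to reweight the samples; mistakes gain weight.
        w = [wi * math.exp(-alpha * y * stump_predict(x, thr, pol))
             for wi, x, y in zip(w, xs, ys)]
        total = sum(w)
        w = [wi / total for wi in w]
    return ensemble

def ensemble_predict(ensemble, x):
    """Sign of the alpha-weighted vote of all stumps."""
    score = sum(a * stump_predict(x, thr, pol) for a, thr, pol in ensemble)
    return 1 if score >= 0 else -1

# Small hand-made toy data set that no single stump can classify perfectly.
xs = [0, 1, 2, 3, 4, 5, 6, 7, 8, 9]
ys = [1, 1, 1, -1, -1, -1, 1, 1, 1, -1]
ensemble = adaboost(xs, ys, rounds=3)
errors = sum(ensemble_predict(ensemble, x) != y for x, y in zip(xs, ys))
print(errors, [round(a, 4) for a, _, _ in ensemble])
```

Each individual stump misclassifies at least three of the ten points, but the weighted combination of three stumps fits this toy set, which is the point of reweighting the samples between rounds.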

Decision Tree & Random Forest

- AdaBoost derivation

- weak classifiers made up of decision trees -> random forest (simple vote, no weight updates)

- decision tree

- core idea: search over all features to find the one whose split achieves the maximum information gain.

- $\max E_{gain} = \max_{features} (E_{original} - E_{split})$; in other words, maximizing the entropy gain is the same as minimizing the impurity after the split.

- classification {measured with entropy gain, Gini impurity, misclassification error}

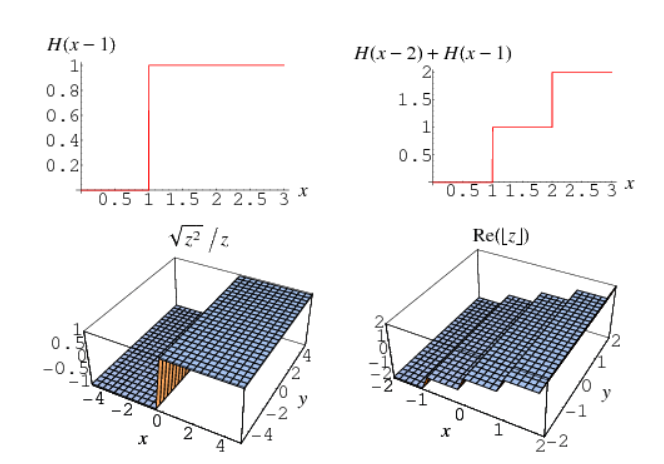
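
The split search and the three classification measures listed above can be sketched as follows. This is a minimal illustration, not a full tree learner: the exhaustive threshold search, the tiny data set, and the function names are assumptions made for the example.

```python
import math
from collections import Counter

def class_probs(labels):
    n = len(labels)
    return [c / n for c in Counter(labels).values()]

def entropy(labels):
    return -sum(p * math.log2(p) for p in class_probs(labels))

def gini(labels):
    return 1.0 - sum(p * p for p in class_probs(labels))

def misclassification(labels):
    return 1.0 - max(class_probs(labels))

def gain(labels, left, right, impurity=entropy):
    """E_original - E_split, where E_split is the size-weighted impurity of the children."""
    n = len(labels)
    after = len(left) / n * impurity(left) + len(right) / n * impurity(right)
    return impurity(labels) - after

def best_split(rows, labels, impurity=entropy):
    """Search every feature and every threshold; return (gain, feature, threshold)."""
    best = (0.0, None, None)
    for f in range(len(rows[0])):
        for thr in sorted({r[f] for r in rows}):
            left = [y for r, y in zip(rows, labels) if r[f] < thr]
            right = [y for r, y in zip(rows, labels) if r[f] >= thr]
            if not left or not right:
                continue  # a split must send samples to both sides
            g = gain(labels, left, right, impurity)
            if g > best[0]:
                best = (g, f, thr)
    return best

# Toy data: feature 0 separates the classes, feature 1 does not.
rows = [(1, 5), (2, 1), (3, 4), (4, 2)]
labels = ["a", "a", "b", "b"]
print(best_split(rows, labels))
print(entropy(labels), gini(labels), misclassification(labels))
```

Passing `impurity=gini` or `impurity=misclassification` to `best_split` swaps the measure without changing the search itself.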

- regression {measured with L2 loss; the tree maps inputs to a piecewise constant function}

Since a regression tree is a piecewise constant function, if we split it finely enough, in theory it can approximate any non-linear function.
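
To make the piecewise constant picture concrete, here is a minimal sketch of a 1-D regression tree that chooses each split by L2 loss and predicts the mean of each leaf. The target function (one period of a sine wave), the greedy split rule, and the function names are assumptions chosen for illustration.

```python
import math

def sse(ys):
    """Sum of squared errors around the mean (L2 loss of a constant fit)."""
    if not ys:
        return 0.0
    m = sum(ys) / len(ys)
    return sum((y - m) ** 2 for y in ys)

def fit_tree(points, depth):
    """Fit a 1-D regression tree; points is a list of (x, y) sorted by x."""
    ys = [y for _, y in points]
    if depth == 0 or len(points) < 2:
        return sum(ys) / len(ys)  # leaf: a single constant
    # choose the split index that minimizes the total L2 loss of both sides
    k = min(range(1, len(points)), key=lambda i: sse(ys[:i]) + sse(ys[i:]))
    thr = (points[k - 1][0] + points[k][0]) / 2
    return (thr, fit_tree(points[:k], depth - 1), fit_tree(points[k:], depth - 1))

def tree_predict(tree, x):
    """Walk down the tree until a leaf constant is reached."""
    while isinstance(tree, tuple):
        thr, left, right = tree
        tree = left if x < thr else right
    return tree

points = [(i / 100, math.sin(2 * math.pi * i / 100)) for i in range(100)]
mse_by_depth = {}
for depth in (1, 3, 5):
    tree = fit_tree(points, depth)
    mse_by_depth[depth] = sum((tree_predict(tree, x) - y) ** 2
                              for x, y in points) / len(points)
    print(depth, mse_by_depth[depth])
```

The training error shrinks as the depth grows, because every extra level cuts the input range into more constant pieces.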

- drawbacks of decision trees

- as long as the tree is deep enough, we can achieve very high accuracy on the training set. However, when the feature dimension is too high, the “curse of dimensionality” may set in and the model will overfit.

- pruning is complex; it is like tuning hyper-parameters. Many methods exist; a common one for classification is Cost-Complexity Pruning (CCP).
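
As a rough illustration of why pruning behaves like a hyper-parameter, cost-complexity pruning scores a subtree $T$ by $R_\alpha(T) = R(T) + \alpha |T|$, where $R(T)$ is its training error and $|T|$ its number of leaves. The sketch below only shows the selection step; the candidate subtrees and their numbers are invented for the example and do not come from any real data set.

```python
def cost_complexity(error, n_leaves, alpha):
    """R_alpha(T) = R(T) + alpha * |T|, with |T| the number of leaves."""
    return error + alpha * n_leaves

def select_subtree(candidates, alpha):
    """Pick the (error, n_leaves) candidate with the smallest penalized cost."""
    return min(candidates, key=lambda c: cost_complexity(c[0], c[1], alpha))

# Hypothetical nested subtrees as (training error, number of leaves);
# these numbers are made up purely for illustration.
candidates = [(0.30, 1), (0.15, 2), (0.08, 4), (0.05, 8), (0.04, 16)]

for alpha in (0.0, 0.01, 0.05, 0.2):
    print(alpha, select_subtree(candidates, alpha))
```

With $\alpha = 0$ the largest tree wins; as $\alpha$ grows, smaller subtrees are preferred, so $\alpha$ has to be tuned like any other hyper-parameter.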

- using decision trees under the bagging framework is called a Random Forest

- each tree is a weak classifier; accuracy only slightly better than 50% is enough

- each tree is trained on a random sample of the data

- the features used to build each tree are also randomly selected

- compared with a single decision tree, combining multiple decision trees effectively reduces the variance.
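
One way to see why voting over many weak trees helps is the following sketch. It makes a strong simplifying assumption: every tree is correct with the same probability `p` and the trees err independently. Real trees trained on overlapping data are correlated, which is part of the motivation for randomizing both samples and features. The function name is hypothetical.

```python
from math import comb

def majority_vote_accuracy(n_trees, p):
    """Probability that more than half of n_trees independent voters are right,
    when each one is right with probability p (n_trees assumed odd)."""
    need = n_trees // 2 + 1
    return sum(comb(n_trees, k) * p**k * (1 - p)**(n_trees - k)
               for k in range(need, n_trees + 1))

for n in (1, 11, 101):
    print(n, majority_vote_accuracy(n, 0.55), majority_vote_accuracy(n, 0.5))
```

Under these assumptions, trees that are only slightly better than chance produce a vote that improves as trees are added, while trees at exactly 50% give a vote that stays at 50% no matter how many are combined.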

- decision tree

- XGBoost, see “More about Decision Tree”